Information technology systems in the world of work, science, and informatics.

The future development of information technology systems in the world of work, science, and informatics.

The future of a new technology as a new science.

The world of computing technology is very important for the future development of jobs.

This opinion piece proposes a new way of creating a resource for developing this field, which can offer a lot of work for young people.

Informatics is very important throughout the world.

Information technology can be applied in many categories in the modern world.

The categories of products and services for this business are:

Information, Multimedia Communication, Services Computing, Medical Analysis with Computer Systems and Healthcare, Civil Government, Defense, Information Technology, Energy, Professional Communication, Information Security, Arts, Education with Computer School, and other jobs.

Descriptions of the technical information

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computers. For example, computing includes designing, developing and building hardware and software systems; processing, structuring, and managing various kinds of information; doing scientific research on and with computers; making computer systems behave intelligently; and creating and using communications and entertainment media. Subfields of computing include computer engineering, software engineering, computer science, information systems, and information technology.

Information

Information, in its general sense, is “knowledge communicated or received concerning a particular fact or circumstance”. Information cannot be predicted and resolves uncertainty. The uncertainty of an event is measured by its probability of occurrence: the less probable the event, the greater the uncertainty. The more uncertain an event is, the more information is required to resolve the uncertainty of that event. The amount of information is measured in bits.

Example: information in one “fair” coin flip: log2(2/1) = 1 bit, and in two fair coin flips is log2(4/1) = 2 bits.
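The coin-flip figures above can be reproduced with a short Python sketch. The function name `self_information_bits` is a hypothetical helper introduced here for illustration. It assumes the standard definition in which an outcome with probability p carries log2(1/p) bits.

```python
import math


def self_information_bits(probability: float) -> float:
    """Return the information, in bits, carried by an outcome with this probability."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be greater than 0 and at most 1")
    # Less probable outcomes resolve more uncertainty, so they carry more bits.
    return math.log2(1.0 / probability)


# One fair coin flip has 2 equally likely outcomes; two flips have 4.
one_flip_bits = self_information_bits(1 / 2)
two_flips_bits = self_information_bits(1 / 4)
print(one_flip_bits, two_flips_bits)
```

Because the measure is logarithmic, the information in independent events adds up: two flips carry twice the bits of one flip.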

Information, in its most restricted technical sense, is a sequence of symbols that can be interpreted as a message. Information can be recorded as signs, or transmitted as signals. Information is any kind of event that affects the state of a dynamic system. Conceptually, information is the message (utterance or expression) being conveyed. The meaning of this concept varies in different contexts.[1] Moreover, the concept of information is closely related to notions of constraint, communication, control, data, form, instruction, knowledge, meaning, understanding, mental stimuli, pattern, perception, representation, and entropy.

Multimedia Communication

Multimedia is media and content that uses a combination of different content forms. This contrasts with media that use only rudimentary computer displays such as text-only or traditional forms of printed or hand-produced material. Multimedia includes a combination of text, audio, still images, animation, video, or interactivity content forms.

Multimedia is usually recorded and played, displayed, or accessed by information content processing devices, such as computerized and electronic devices, but can also be part of a live performance. Multimedia devices are electronic media devices used to store and experience multimedia content. Multimedia is distinguished from mixed media in fine art; by including audio, for example, it has a broader scope. The term “rich media” is synonymous with interactive multimedia. Hypermedia can be considered one particular multimedia application.

Services Computing

Services Computing has become a cross-discipline that covers the science and technology of bridging the gap between business services and IT services. The underlying technology suite includes Web services and service-oriented architecture (SOA), cloud computing, business consulting methodology and utilities, business process modeling, transformation and integration. The scope of Services Computing covers the whole life-cycle of services innovation research, which includes business componentization, services modeling, services creation, services realization, services annotation, services deployment, services discovery, services composition, services delivery, service-to-service collaboration, services monitoring, services optimization, as well as services management. The goal of Services Computing is to enable IT services and computing technology to perform business services more efficiently and effectively.

Hospital information system

These are comprehensive, integrated information systems designed to manage the medical, administrative, financial and legal aspects of a hospital and its service processing. Traditional approaches encompass paper-based information processing as well as resident work position and mobile data acquisition and presentation.

Health services are one of the most important issues, and hospitals provide medical assistance to people. A useful introduction to hospital information systems was given at the 2011 International Conference on Social Science and Humanity: hospital information systems can be defined as massive, integrated systems that support the comprehensive information requirements of hospitals, including patient, clinical, ancillary and financial management. Hospitals are extremely complex institutions in which large departments and units coordinate care for patients. Hospitals are becoming more reliant on the ability of the hospital information system (HIS) to assist in diagnosis, management and education for better and improved services and practices. In health organizations such as hospitals, implementation of an HIS is inevitable due to many mediating and dominating factors such as organization, people and technology.

Architecture

Hospital information system architecture has three main levels: the central government level, the territory level, and the patient carrying level. Generally, all types of hospital information system (HIS) are supported by client-server architectures for networking and processing. Most work positions for HIS are currently resident types. Mobile computing began with wheeled PC stands; now tablet computers and smartphone applications are used.

Enterprise HIS with Internet architectures have been successfully deployed in Public Healthcare Territories and have been widely adopted by further entities. The Hospital Information System (HIS) is a province-wide initiative designed to improve access to patient information through a central electronic information system. HIS’s goal is to streamline patient information flow and its accessibility for doctors and other health care providers. These changes in service will improve patient care quality and patient safety over time.

The patient carrying system records patient information, patient laboratory test results, and the patient’s doctor information. Doctors can easily access personal information, test results, and previous prescriptions. Patient schedule organization and early warning systems can be provided by related systems.

A cloud computing alternative is not recommended, as the data security of services for individual patient records is not well accepted by the public.

HIS can be composed of one or several software components with specialty-specific extensions, as well as of a large variety of sub-systems in medical specialties, for example Laboratory Information System (LIS), Policy and Procedure Management System, Radiology Information System (RIS) or Picture archiving and communication system (PACS).

Clinical information systems (CISs) are sometimes separated from HISs in that one focuses on flow management and clinical-state-related data and the other focuses on patient-related data with the doctor’s letters and the electronic patient record. However, the naming differences are not standardised between suppliers.

The architecture is based on a distributed approach and on the use of standard software products that comply with industrial and market standards (such as UNIX operating systems, MS-Windows, local area networks based on Ethernet and TCP/IP protocols, relational database management systems based on the SQL language or Oracle databases, and the C programming language).

Civil Government

Civil authority (also known as civil government) is that apparatus of the state other than its military units that enforces law and order. It is also used to distinguish between religious authority (for example Canon law) and secular authority. In a religious context it may be defined “as synonymous with human government, in contradistinction to a government by God, or the divine government.”

Types of authority

Thus three forms of authority may be seen in States:

- Civil authority

- Military authority

- Religious authority (certain constitutions exclude the state having any religious authority)

It can also mean the moral power of command, supported (when need be) by physical coercion, which the State exercises over its members. In this view, because man cannot live in isolation without being deprived of what makes him fully human, and because authority is necessary for a society to hold together, the authority has not only the power but the right to command. It is natural to man to live in society, to submit to authority, and to be governed by that custom of society which crystallizes into law, and the obedience that is required is paid to the powers that be, to the authority actually in possession. The extent of its authority is bound by the ends it has in view, and the extent to which it actually provides for the government of society.

In modern states, enforcement of law and order is typically a role of the police, although the line between military and civil units may be hard to distinguish, especially when militias and volunteers, such as yeomanry, act in pursuance of non-military, domestic objectives.

Defence Technology Informatics

The Information Sciences Institute (ISI) is a research and development unit of the University of Southern California’s Viterbi School of Engineering which focuses on computer and communications technology and information processing. It helped spearhead development of the Internet, including the Domain Name System and refinement of TCP/IP communications protocols that remain fundamental to Internet operations.

ISI conducts basic and applied research for corporations and more than 20 U.S. federal government agencies. It is known for its cross-disciplinary depth, including expertise in computing organizations, interfaces, environments, grids, networks, platforms and microelectronics. ISI has more than 350 employees, approximately half with doctorate degrees; fifty are faculty at USC. The Institute has a satellite campus in Arlington, Virginia. ISI is currently led by Herbert Schorr, former executive and scientist at IBM.

ISI is one of 12 organizations that operate or host internet root name servers.

ISI’s expertise falls into three broad categories. “Intelligent systems,” where ISI has world-class artificial intelligence capabilities, include natural language, robotics, information integration and geoinformatics, and knowledge representation. “Distributed systems” cover grid information security, networking, resource management, large-scale simulation and energy megasystems, among others. “Hardware systems” involve space electronics, fine-grained computing, wireless networking, biomimetic and bio-inspired systems, and other hardware technologies.

More than 30 concurrent projects include:

- Intelligent systems: genetics research support, integrated geospatial and textual information access, automated language translation and social network dynamics.

- Distributed systems: the Defense Technology Experimental Research (DETER) testbed for Internet security, the Biomedical Informatics Research Network in health informatics, and Internet mapping efforts.

- Hardware systems: cognitive function repair via an electronic hippocampus bypass, X-ray microscopy chip inspection and wireless technologies development.

Customers, startups, and spinoffs

ISI’s US government customers include the National Institutes of Health, National Science Foundation, US Geological Survey, and Departments of Agriculture, Defense, Energy, Homeland Security and Justice. Among ISI’s corporate customers, Chevron Corp and USC operate the Center for Smart Oilfield Technologies (CiSoft), a joint venture that involves robotics, artificial intelligence, sensor nets, data management and other technologies for locating, extracting and producing oil. The Institute spawned about a dozen startup and spinoff companies in grid software, geospatial information fusion, machine translation, data integration and other technologies.

Information technology

Information technology (IT) is the application of computers and telecommunications equipment to store, retrieve, transmit and manipulate data, often in the context of a business or other enterprise. The term is commonly used as a synonym for computers and computer networks, but it also encompasses other information distribution technologies such as television and telephones. Several industries are associated with information technology, such as computer hardware, software, electronics, semiconductors, internet, telecom equipment, e-commerce and computer services.

In a business context, the Information Technology Association of America has defined information technology as “the study, design, development, application, implementation, support or management of computer-based information systems”. The responsibilities of those working in the field include network administration, software development and installation, and the planning and management of an organisation’s technology life cycle, by which hardware and software is maintained, upgraded, and replaced.

Humans have been storing, retrieving, manipulating and communicating information since the Sumerians in Mesopotamia developed writing in about 3000 BC, but the term “information technology” in its modern sense first appeared in a 1958 article published in the Harvard Business Review; authors Harold J. Leavitt and Thomas L. Whisler commented that “the new technology does not yet have a single established name. We shall call it information technology (IT).”

Based on the storage and processing technologies employed, it is possible to distinguish four distinct phases of IT development: pre-mechanical (3000 BC – 1450 AD), mechanical (1450–1840), electromechanical (1840–1940) and electronic (1940–present). This article focuses on the most recent period (electronic), which began in about 1940.

History of computers

Early electronic computers such as Colossus made use of punched tape, a long strip of paper on which data was represented by a series of holes, a technology now obsolete. Electronic data storage, which is used in modern computers, dates from the Second World War, when a form of delay line memory was developed to remove the clutter from radar signals, the first practical application of which was the mercury delay line. The first random-access digital storage device was the Williams tube, based on a standard cathode ray tube, but the information stored in it and delay line memory was volatile in that it had to be continuously refreshed, and thus was lost once power was removed. The earliest form of non-volatile computer storage was the magnetic drum, invented in 1932 and used in the Ferranti Mark 1, the world’s first commercially available general-purpose electronic computer.

Most digital data today is still stored magnetically on devices such as hard disk drives, or optically on media such as CD-ROMs. It has been estimated that the worldwide capacity to store information on electronic devices grew from less than 3 exabytes in 1986 to 295 exabytes in 2007, doubling roughly every 3 years.
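The "doubling roughly every 3 years" figure can be checked against the two storage estimates with a small Python sketch. It assumes steady exponential growth between 1986 and 2007 and uses 3 exabytes as the starting value, although the source only says "less than 3", so the result is approximate. The function name `doubling_time_years` is a hypothetical helper.

```python
import math


def doubling_time_years(start: float, end: float, elapsed_years: float) -> float:
    """Years per doubling, assuming steady exponential growth from start to end."""
    if start <= 0 or end <= start or elapsed_years <= 0:
        raise ValueError("need 0 < start < end and a positive time span")
    # Number of doublings is log2(end / start); divide the time span by it.
    return elapsed_years * math.log(2) / math.log(end / start)


# Assumed inputs: 3 exabytes in 1986 (an upper bound) and 295 exabytes in 2007.
storage_doubling = doubling_time_years(3.0, 295.0, 2007 - 1986)
print(f"{storage_doubling:.1f} years per doubling")
```

Under these assumptions the result comes out slightly above three years, which is consistent with the rounded figure in the text.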

Databases

Database management systems emerged in the 1960s to address the problem of storing and retrieving large amounts of data accurately and quickly. One of the earliest such systems was IBM’s Information Management System (IMS), which is still widely deployed more than 40 years later. IMS stores data hierarchically, but in the 1970s Ted Codd proposed an alternative relational storage model based on set theory and predicate logic and the familiar concepts of tables, rows and columns. The first commercially available relational database management system (RDBMS) was available from Oracle in 1980.

All database management systems consist of a number of components that together allow the data they store to be accessed simultaneously by many users while maintaining its integrity. A characteristic of all databases is that the structure of the data they contain is defined and stored separately from the data itself, in a database schema.

The extensible markup language (XML) has become a popular format for data representation in recent years. Although XML data can be stored in normal file systems, it is commonly held in relational databases to take advantage of their “robust implementation verified by years of both theoretical and practical effort”. As an evolution of the Standard Generalized Markup Language (SGML), XML’s text-based structure offers the advantage of being both machine and human-readable.

Data retrieval

The relational database model introduced a programming-language independent Structured Query Language (SQL), based on relational algebra.
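As a minimal sketch of these ideas, the following Python example uses the standard-library `sqlite3` module to define a schema, store rows, and query them with SQL. The table name `staff`, its columns, and the sample rows are hypothetical and chosen only for illustration. SQLite stands in here for any relational database.

```python
import sqlite3

# An in-memory database keeps the example self-contained.
conn = sqlite3.connect(":memory:")

# The schema (structure) is declared separately from the data it will hold.
conn.execute(
    "CREATE TABLE staff ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, department TEXT NOT NULL)"
)

# Hypothetical sample rows, inserted with parameter placeholders.
conn.executemany(
    "INSERT INTO staff (name, department) VALUES (?, ?)",
    [("Ada", "Radiology"), ("Grace", "Laboratory"), ("Edgar", "Radiology")],
)

# A declarative SQL query: count staff per department.
rows = conn.execute(
    "SELECT department, COUNT(*) FROM staff "
    "GROUP BY department ORDER BY department"
).fetchall()
print(rows)

# The stored schema can be read back independently of the rows.
schema_sql = conn.execute(
    "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = 'staff'"
).fetchone()[0]
print(schema_sql)
```

The query states what result is wanted rather than how to compute it, which is what makes SQL independent of the host programming language.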

The terms “data” and “information” are not synonymous. Anything stored is data, but it only becomes information when it is organised and presented meaningfully. Most of the world’s digital data is unstructured, and stored in a variety of different physical formats even within a single organization. Data warehouses began to be developed in the 1980s to integrate these disparate stores. They typically contain data extracted from various sources, including external sources such as the Internet, organised in such a way as to facilitate decision support systems (DSS).

Data transmission

Data transmission has three aspects: transmission, propagation, and reception.

XML has been increasingly employed as a means of data interchange since the early 2000s, particularly for machine-oriented interactions such as those involved in web-oriented protocols such as SOAP, describing “data-in-transit rather than … data-at-rest”. One of the challenges of such usage is converting data from relational databases into XML Document Object Model (DOM) structures.
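The conversion from relational rows to a DOM structure can be sketched in Python with the standard-library `sqlite3` and `xml.dom.minidom` modules. The helper `rows_to_dom`, the table `staff`, and the element names are hypothetical. The sketch also assumes that every column name is a valid XML element name, which real schemas do not guarantee.

```python
import sqlite3
from xml.dom.minidom import Document


def rows_to_dom(cursor: sqlite3.Cursor, root_name: str, row_name: str) -> Document:
    """Build a DOM document with one element per row and one child per column."""
    doc = Document()
    root = doc.createElement(root_name)
    doc.appendChild(root)
    # cursor.description holds one tuple per result column; index 0 is its name.
    columns = [column[0] for column in cursor.description]
    for row in cursor:
        row_element = doc.createElement(row_name)
        for column, value in zip(columns, row):
            field = doc.createElement(column)
            # NULL values become empty text; everything else is stringified.
            field.appendChild(doc.createTextNode("" if value is None else str(value)))
            row_element.appendChild(field)
        root.appendChild(row_element)
    return doc


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staff (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO staff (name) VALUES (?)", [("Ada",), ("Grace",)])

doc = rows_to_dom(conn.execute("SELECT id, name FROM staff ORDER BY id"), "staff", "member")
print(doc.toprettyxml(indent="  "))
```

Type information is lost in this simple mapping because every value becomes text, which is one reason the conversion is described as a challenge.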

Data manipulation

Hilbert and Lopez identify the exponential pace of technological change (a kind of Moore’s law): machines’ application-specific capacity to compute information per capita roughly doubled every 14 months between 1986 and 2007; the per capita capacity of the world’s general-purpose computers doubled every 18 months during the same two decades; the global telecommunication capacity per capita doubled every 34 months; the world’s storage capacity per capita required roughly 40 months to double (every 3 years); and per capita broadcast information has doubled every 12.3 years.
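To compare these doubling times on a common scale, each can be converted to an equivalent annual growth rate. The Python sketch below assumes steady exponential growth and uses the doubling times quoted above. The function name `annual_growth_rate` and the dictionary labels are hypothetical.

```python
def annual_growth_rate(doubling_months: float) -> float:
    """Fractional growth per year implied by a doubling time given in months."""
    if doubling_months <= 0:
        raise ValueError("doubling time must be positive")
    # A quantity that doubles every d months grows by a factor 2**(12/d) per year.
    return 2.0 ** (12.0 / doubling_months) - 1.0


# Doubling times taken from the paragraph above, expressed in months.
doubling_times = {
    "application-specific computing": 14,
    "general-purpose computing": 18,
    "telecommunication": 34,
    "storage": 40,
    "broadcast": 12.3 * 12,
}

for name, months in doubling_times.items():
    print(f"{name}: {annual_growth_rate(months):.1%} per year")
```

A shorter doubling time translates into a much higher yearly rate, which makes the gap between computing capacity and broadcast capacity easier to see.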

Massive amounts of data are stored worldwide every day, but unless it can be analysed and presented effectively it essentially resides in what have been called data tombs: “data archives that are seldom visited”. To address that issue, the field of data mining – “the process of discovering interesting patterns and knowledge from large amounts of data” – emerged in the late 1980s.

Academic perspective

In an academic context, the Association for Computing Machinery defines IT as “undergraduate degree programs that prepare students to meet the computer technology needs of business, government, healthcare, schools, and other kinds of organizations …. IT specialists assume responsibility for selecting hardware and software products appropriate for an organization, integrating those products with organizational needs and infrastructure, and installing, customizing, and maintaining those applications for the organization’s computer users.”

Commercial perspective

The business value of information technology lies in the automation of business processes, provision of information for decision making, connecting businesses with their customers, and the provision of productivity tools to increase efficiency.

Worldwide IT spending forecast (billions of U.S. dollars):

| Category | 2012 spending | 2013 spending |
|---|---|---|
| Devices | 627 | 666 |
| Data center systems | 141 | 147 |
| Enterprise software | 278 | 296 |
| IT services | 881 | 927 |
| Telecom services | 1,661 | 1,701 |
| Total | 3,588 | 3,737 |

National Energy Research Scientific Computing Center

The National Energy Research Scientific Computing Center, or NERSC for short, is a designated user facility operated by Lawrence Berkeley National Laboratory and the Department of Energy. It contains several cluster supercomputers, the largest of which is Hopper, which was ranked 5th on the TOP500 list of world’s fastest supercomputers in November 2010 (19th as of November 2012). It is located in Oakland, California.

History

NERSC was founded in 1974 at Lawrence Livermore National Laboratory, where it was then called the Controlled Thermonuclear Research Computer Center (CTRCC) and consisted of a Control Data Corporation 6600 computer. Over time, it expanded to contain a CDC 7600, then a Cray-1 (SN-6) which was called the “c” machine, and in 1985 the world’s first Cray-2 (SN-1) which was the “b” machine, nicknamed bubbles because of the bubbles visible in the fluid of its unique direct liquid cooling system. In the early eighties, CTRCC’s name was changed to the National Magnetic Fusion Energy Computer Center or NMFECC. The name was again changed in the early nineties to National Energy Research Supercomputer Center. In 1996 NERSC moved from LLNL to LBNL. In 2000, it was moved to its current location in Oakland.

Computers and projects

NERSC’s fastest computer, Hopper, is a Cray XE6 named in honor of Grace Hopper, a pioneer in the field of software development and programming languages and the creator of the first compiler. It has 153,308 Opteron processor cores and runs the SUSE Linux operating system.

Other systems at NERSC are named Carver, Magellan, Dirac, Euclid, Tesla, Turing, and PDSF, the longest continually operating Linux cluster in the world. The facility also contains an 8.8-petabyte High Performance Storage System (HPSS) installation.

NERSC facilities are accessible through the Energy Sciences Network or ESnet, which was created and is managed by NERSC.

Professional communication

Professional communication encompasses written, oral, visual and digital communication within a workplace context. This discipline blends together pedagogical principles of rhetoric, technology, and software to improve communication in a variety of settings ranging from technical writing to usability and digital media design. It is a new discipline that focuses on the study of information and the ways it is created, managed, distributed, and consumed. Since communication in modern society is a rapidly changing area, the progress of technologies seems to often outpace the number of available expert practitioners. This creates a demand for skilled communicators which continues to exceed the supply of trained professionals.

The field of professional communication is closely related to that of technical communication, though professional communication encompasses a wider variety of skills. Professional communicators use strategies, theories, and technologies to more effectively communicate in the business world.

Successful communication skills are critical to a business because all businesses, though to varying degrees, involve the following: writing, reading, editing, speaking, listening, software applications, computer graphics, and Internet research. Job candidates with professional communication backgrounds are more likely to bring to the organization sophisticated perspectives on society, culture, science, and technology.

Professional communication and theory

Professional communication draws on theories from fields as different as rhetoric and science, psychology and philosophy, sociology and linguistics.

Much of professional communication theory is a practical blend of traditional communication theory, technical writing, rhetorical theory, and ethics. In “What’s Practical about Technical Writing?”, Carolyn Miller refers to professional communication as not simply workplace activity, and to writing that concerns “human conduct in those activities that maintain the life of a community.” As Nancy Roundy Blyler discusses in her article “Research as Ideology in Professional Communication”, researchers seek to expand professional communication theory to include concerns with praxis and social responsibility.

Regarding this social aspect, in “Postmodern Practice: Perspectives and Prospects,” Richard C. Freed defines professional communication as

A. discourse directed to a group, or to an individual operating as a member of the group, with the intent of affecting the group’s function, and/or B. discourse directed from a group, or from an individual operating as a member of the group, with the intent of affecting the group’s function, where group means an entity intentionally organized and/or run by its members to perform a certain function….Primarily excluded from this definition of group would be families (who would qualify only if, for example, their group affiliation were a family business), school classes (which would qualify only if, for example, they had organized themselves to perform a function outside the classroom–for example, to complain about or praise a teacher to a school administrator), and unorganized aggregates (i.e., masses of people). Primarily excluded from the definition of professional communication would be diary entries (discourse directed toward the writer), personal correspondence (discourse directed to one or more readers apart from their group affiliations), reportage or belletristic discourse (novels, poems, occasional essays–discourse usually written by individuals and directed to multiple readers not organized as a group), most intraclassroom communications (for example, classroom discourse composed by students for teachers) and some technical communications (for example, instructions–for changing a tire, assembling a product, and the like; again, discourse directed toward readers or listeners apart from their group affiliations)….Professional communication…would seem different from discourse involving a single individual apart from a group affiliation communicating with another such person, or a single individual communicating with a large unorganized aggregate of individuals as suggested by the term mass communication (Blyler and Thralls, Professional Communication: The Social Perspective,

Professional communication journals

The IEEE Transactions on Professional Communication is a refereed quarterly journal published since 1957 by the Professional Communication Society of the Institute of Electrical and Electronics Engineers (IEEE). The readers represent engineers, technical communicators, scientists, information designers, editors, linguists, translators, managers, business professionals and others from around the globe who work as scholars, educators, and/or practitioners. The readers share a common interest in effective communication in technical workplace and academic contexts.

The journal’s research falls into three main categories: (1) the communication practices of technical professionals, such as engineers and scientists, (2) the practices of professional communicators who work in technical or business environments, and (3) research-based methods for teaching professional communication.

The Journal of Professional Communication is housed in the Department of Communication Studies & Multimedia, in the Faculty of Humanities at McMaster University in Hamilton, Ontario.

JPC is an international journal launched to explore the intersections between public relations practice, communication and new media theory, communications management, as well as digital arts and design.

Studying professional communication

The study of professional communication includes:

- the study of rhetoric which serves as a theoretical basis

- the study of technical writing which serves as a form of professional communication

- the study of visual communication which also uses rhetoric as a theoretical basis for various aspects of creating visuals

- the study of various research methods

Other areas of study include global and cross-cultural communication, marketing and public relations, technical editing, digital literacy, composition theory, video production, corporate communication, and publishing. A professional communication program may cater to a very specialized interest or to several different interests. Professional communication can also be closely tied to organizational communication.

Students who pursue graduate degrees in professional communication research communicative practice in organized contexts (including business, academic, scientific, technical, and non-profit settings) to study how communicative practices shape and are shaped by culture, technology, history, and theories of communication.

Professional communication encompasses a broad collection of disciplines, embracing a diversity of rhetorical contexts and situations. Areas of study range from everyday writing at the workplace to historical writing pedagogy, from the implications of new media for communicative practices to the theory and design of online learning, and from oral presentations to the production of websites.

Types of professional documents

- Short reports

- Proposals

- Case studies

- Lab reports

- Memos

- Progress / Interim reports

- Writing for electronic media

Information security

Information security (sometimes shortened to InfoSec) is the practice of defending information from unauthorized access, use, disclosure, disruption, modification, perusal, inspection, recording or destruction. It is a general term that can be used regardless of the form the data may take (electronic, physical, etc.).

Two major aspects of information security are:

IT security: Sometimes referred to as computer security, Information Technology Security is information security applied to technology (most often some form of computer system). It is worthwhile to note that a computer does not necessarily mean a home desktop. A computer is any device with a processor and some memory (even a calculator). IT security specialists are almost always found in any major enterprise/establishment due to the nature and value of the data within larger businesses. They are responsible for keeping all of the technology within the company secure from malicious cyber attacks that often attempt to breach into critical private information or gain control of the internal systems.

Information assurance: The act of ensuring that data is not lost when critical issues arise. These issues include, but are not limited to, natural disasters, computer/server malfunction, physical theft, or any other instance where data has the potential of being lost. Since most information is stored on computers in our modern era, information assurance is typically dealt with by IT security specialists. One of the most common methods of providing information assurance is to have an off-site backup of the data in case one of the mentioned issues arises.

Governments, military, corporations, financial institutions, hospitals, and private businesses amass a great deal of confidential information about their employees, customers, products, research and financial status. Most of this information is now collected, processed and stored on electronic computers and transmitted across networks to other computers.

Should confidential information about a business's customers, finances or new product line fall into the hands of a competitor or a black hat hacker, such a breach of security could lead to exploited data and/or information, exploited staff/personnel, fraud, theft, and information leaks. Also, irreparable data loss and system instability can result from malicious access to confidential data and systems. Protecting confidential information is a business requirement, and in many cases also an ethical and legal requirement.

For the individual, information security has a significant effect on privacy, which is viewed very differently in different cultures.

The field of information security has grown and evolved significantly in recent years. There are many ways of gaining entry into the field as a career. It offers many areas for specialization including: securing network(s) and allied infrastructure, securing applications and databases, security testing, information systems auditing, business continuity planning and digital forensics, etc.

This article presents a general overview of information security and its core concepts.

History

Since the early days of writing, politicians, diplomats and military commanders understood that it was necessary to provide some mechanism to protect the confidentiality of correspondence and to have some means of detecting tampering. Julius Caesar is credited with the invention of the Caesar cipher ca. 50 B.C., which was created in order to prevent his secret messages from being read should a message fall into the wrong hands, but for the most part protection was achieved through the application of procedural handling controls. Sensitive information was marked up to indicate that it should be protected and transported by trusted persons, guarded and stored in a secure environment or strong box. As postal services expanded, governments created official organisations to intercept, decipher, read and reseal letters (e.g. the UK Secret Office and Deciphering Branch in 1653).

In the mid-19th century more complex classification systems were developed to allow governments to manage their information according to the degree of sensitivity. The British Government codified this, to some extent, with the publication of the Official Secrets Act in 1889. By the time of the First World War, multi-tier classification systems were used to communicate information to and from various fronts, which encouraged greater use of code making and breaking sections in diplomatic and military headquarters. In the United Kingdom this led to the creation of the Government Code and Cypher School in 1919. Encoding became more sophisticated between the wars as machines were employed to scramble and unscramble information. The volume of information shared by the Allied countries during the Second World War necessitated formal alignment of classification systems and procedural controls. An arcane range of markings evolved to indicate who could handle documents (usually officers rather than men) and where they should be stored as increasingly complex safes and storage facilities were developed. Procedures evolved to ensure documents were destroyed properly and it was the failure to follow these procedures which led to some of the greatest intelligence coups of the war (e.g. U-570).

The end of the 20th century and early years of the 21st century saw rapid advancements in telecommunications, computing hardware and software, and data encryption. The availability of smaller, more powerful and less expensive computing equipment brought electronic data processing within the reach of small businesses and home users. These computers quickly became interconnected through a network generically called the Internet.

The rapid growth and widespread use of electronic data processing and electronic business conducted through the Internet, along with numerous occurrences of international terrorism, fueled the need for better methods of protecting the computers and the information they store, process and transmit. The academic disciplines of computer security and information assurance emerged along with numerous professional organizations – all sharing the common goals of ensuring the security and reliability of information systems.

Basic principles

Key concepts

The CIA triad (confidentiality, integrity and availability) is one of the core principles of information security.

There is continuous debate about extending this classic trio. Other principles such as Accountability have sometimes been proposed for addition – it has been pointed out that issues such as Non-Repudiation do not fit well within the three core concepts, and as regulation of computer systems has increased (particularly amongst the Western nations) Legality is becoming a key consideration for practical security installations.

The OECD's Guidelines for the Security of Information Systems and Networks, published in 1992 and revised in 2002, proposed nine generally accepted principles: Awareness, Responsibility, Response, Ethics, Democracy, Risk Assessment, Security Design and Implementation, Security Management, and Reassessment. Building upon those, in 2004 the NIST's Engineering Principles for Information Technology Security proposed 33 principles. Guidelines and practices have been derived from each of these.

In 2002, Donn Parker proposed an alternative model for the classic CIA triad that he called the six atomic elements of information. The elements are confidentiality, possession, integrity, authenticity, availability, and utility. The merits of the Parkerian hexad are a subject of debate amongst security professionals.

Confidentiality

Confidentiality refers to preventing the disclosure of information to unauthorized individuals or systems. For example, a credit card transaction on the Internet requires the credit card number to be transmitted from the buyer to the merchant and from the merchant to a transaction processing network. The system attempts to enforce confidentiality by encrypting the card number during transmission, by limiting the places where it might appear (in databases, log files, backups, printed receipts, and so on), and by restricting access to the places where it is stored. If an unauthorized party obtains the card number in any way, a breach of confidentiality has occurred.

Confidentiality is necessary for maintaining the privacy of the people whose personal information a system holds.
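
One of the measures described above, limiting the places where a card number might appear, can be illustrated with a small masking helper. This is only a sketch: the function name `mask_card_number` and the choice to keep the last four digits visible are assumptions made for illustration, not requirements taken from any standard.

```python
def mask_card_number(card_number: str, visible: int = 4) -> str:
    """Return the card number with all but the last `visible` digits hidden.

    Hypothetical helper: spaces and dashes are dropped, and only digits
    are counted, so the result is safe to write to a log or a receipt.
    """
    digits = [c for c in card_number if c.isdigit()]
    if len(digits) <= visible:
        # Too short to reveal anything safely: hide every digit.
        return "*" * len(digits)
    hidden = len(digits) - visible
    return "*" * hidden + "".join(digits[-visible:])


# A well-known test number, not a real account.
print(mask_card_number("4111 1111 1111 1111"))
```

Masking does not replace encryption or access restrictions; it only reduces the number of places where the full number is exposed.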

Integrity

In information security, data integrity means maintaining and assuring the accuracy and consistency of data over its entire life-cycle. This means that data cannot be modified in an unauthorized or undetected manner. This is not the same thing as referential integrity in databases, although it can be viewed as a special case of Consistency as understood in the classic ACID model of transaction processing. Integrity is violated when a message is actively modified in transit. Information security systems typically provide message integrity in addition to data confidentiality.
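
A common way to detect modification in transit is to attach a keyed message authentication code to each message. The sketch below uses HMAC-SHA256 from the Python standard library; the key, the messages and the function names `make_tag` and `verify` are illustrative assumptions, and a real system would also need a way to distribute and protect the shared key.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message with the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Return True only if the tag matches the message (constant-time compare)."""
    return hmac.compare_digest(make_tag(key, message), tag)


key = b"demo-key-not-for-production"  # assumption: shared secret for the demo
original = b"transfer 100 to account 42"
tag = make_tag(key, original)

print(verify(key, original, tag))                       # unmodified message
print(verify(key, b"transfer 900 to account 42", tag))  # modified in transit
```

The first check succeeds and the second fails, because any change to the message changes the tag that the receiver recomputes.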

Availability

For any information system to serve its purpose, the information must be available when it is needed. This means that the computing systems used to store and process the information, the security controls used to protect it, and the communication channels used to access it must be functioning correctly. High availability systems aim to remain available at all times, preventing service disruptions due to power outages, hardware failures, and system upgrades. Ensuring availability also involves preventing denial-of-service attacks.

Authenticity

In computing, e-Business, and information security, it is necessary to ensure that the data, transactions, communications or documents (electronic or physical) are genuine. It is also important for authenticity to validate that both parties involved are who they claim to be. Some information security systems incorporate authentication features such as “digital signatures”, which give evidence that the message data is genuine and was sent by someone possessing the proper signing key.

Non-repudiation

In law, non-repudiation implies one’s intention to fulfill their obligations to a contract. It also implies that one party of a transaction cannot deny having received a transaction nor can the other party deny having sent a transaction.

It is important to note that while technology such as cryptographic systems can assist in non-repudiation efforts, the concept is at its core a legal concept transcending the realm of technology. It is not, for instance, sufficient to show that the message matches a digital signature signed with the sender’s private key, and thus only the sender could have sent the message and nobody else could have altered it in transit. The alleged sender could in return demonstrate that the digital signature algorithm is vulnerable or flawed, or allege or prove that his signing key has been compromised. The fault for these violations may or may not lie with the sender himself, and such assertions may or may not relieve the sender of liability, but the assertion would invalidate the claim that the signature necessarily proves authenticity and integrity and thus prevents repudiation.

Information security

Information security analysts are information technology (IT) specialists who are accountable for safeguarding all data and communications that are stored and shared in network systems. In the financial industry, for example, information security analysts might continually upgrade firewalls that block unnecessary access to sensitive business data and might perform vulnerability tests to assess the effectiveness of security measures.

Electronic commerce uses technology such as digital signatures and public key encryption to establish authenticity and non-repudiation.

Risk management

The Certified Information Systems Auditor (CISA) Review Manual 2006 provides the following definition of risk management: “Risk management is the process of identifying vulnerabilities and threats to the information resources used by an organization in achieving business objectives, and deciding what countermeasures, if any, to take in reducing risk to an acceptable level, based on the value of the information resource to the organization.”

There are two things in this definition that may need some clarification. First, the process of risk management is an ongoing, iterative process. It must be repeated indefinitely. The business environment is constantly changing and new threats and vulnerabilities emerge every day. Second, the choice of countermeasures (controls) used to manage risks must strike a balance between productivity, cost, effectiveness of the countermeasure, and the value of the informational asset being protected.

Risk analysis and risk evaluation processes have their limitations since, when security incidents occur, they emerge in a context, and their rarity and even their uniqueness give rise to unpredictable threats. The analysis of these phenomena which are characterized by breakdowns, surprises and side-effects, requires a theoretical approach which is able to examine and interpret subjectively the detail of each incident.

Risk is the likelihood that something bad will happen that causes harm to an informational asset (or the loss of the asset). A vulnerability is a weakness that could be used to endanger or cause harm to an informational asset. A threat is anything (manmade or act of nature) that has the potential to cause harm.

The likelihood that a threat will use a vulnerability to cause harm creates a risk. When a threat does use a vulnerability to inflict harm, it has an impact. In the context of information security, the impact is a loss of availability, integrity, and confidentiality, and possibly other losses (lost income, loss of life, loss of real property). It should be pointed out that it is not possible to identify all risks, nor is it possible to eliminate all risk. The remaining risk is called “residual risk”.

A risk assessment is carried out by a team of people who have knowledge of specific areas of the business. Membership of the team may vary over time as different parts of the business are assessed. The assessment may use a subjective qualitative analysis based on informed opinion, or, where reliable dollar figures and historical information are available, a quantitative analysis.

Research has shown that the most vulnerable point in most information systems is the human user, operator, designer, or other human. The ISO/IEC 27002:2005 Code of practice for information security management recommends the following be examined during a risk assessment:

- security policy,

- organization of information security,

- asset management,

- human resources security,

- physical and environmental security,

- communications and operations management,

- access control,

- information systems acquisition, development and maintenance,

- information security incident management,

- business continuity management, and

- regulatory compliance.

In broad terms, the risk management process consists of:

- Identification of assets and estimating their value. Include: people, buildings, hardware, software, data (electronic, print, other), supplies.

- Conduct a threat assessment. Include: Acts of nature, acts of war, accidents, malicious acts originating from inside or outside the organization.

- Conduct a vulnerability assessment, and for each vulnerability, calculate the probability that it will be exploited. Evaluate policies, procedures, standards, training, physical security, quality control, technical security.

- Calculate the impact that each threat would have on each asset. Use qualitative analysis or quantitative analysis.

- Identify, select and implement appropriate controls. Provide a proportional response. Consider productivity, cost effectiveness, and value of the asset.

- Evaluate the effectiveness of the control measures. Ensure the controls provide the required cost effective protection without discernible loss of productivity.
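
The quantitative side of the process above can be sketched in a few lines. The example assumes the simplest possible scoring rule, exposure = probability of exploitation × estimated impact; that rule, the `Risk` class and all figures are illustrative assumptions and are not taken from ISO/IEC 27002 or any other standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    asset: str
    threat: str
    probability: float  # assumed yearly chance of exploitation, 0.0 to 1.0
    impact: float       # assumed loss in dollars if the threat succeeds

    @property
    def exposure(self) -> float:
        """Expected yearly loss under the simple probability x impact rule."""
        return self.probability * self.impact


# Hypothetical register entries; every number here is made up.
risks = [
    Risk("customer database", "SQL injection", 0.10, 500_000),
    Risk("office laptops", "physical theft", 0.30, 20_000),
    Risk("data centre", "flood", 0.01, 2_000_000),
]

# Rank the risks so that controls can be chosen for the largest exposures first.
ranked = sorted(risks, key=lambda r: r.exposure, reverse=True)
for r in ranked:
    print(f"{r.asset:<20} {r.threat:<16} {r.exposure:>12,.0f}")
```

A ranking like this only supports the decision; the choice of controls still has to weigh productivity, cost effectiveness and the value of the asset, as described in the steps above.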

For any given risk, management can choose to accept the risk based upon the relative low value of the asset, the relative low frequency of occurrence, and the relative low impact on the business. Or, leadership may choose to mitigate the risk by selecting and implementing appropriate control measures to reduce the risk. In some cases, the risk can be transferred to another business by buying insurance or outsourcing to another business.[10] The reality of some risks may be disputed. In such cases leadership may choose to deny the risk.

Controls

When management chooses to mitigate a risk, they will do so by implementing one or more of three different types of controls.

Administrative

Administrative controls (also called procedural controls) consist of approved written policies, procedures, standards and guidelines. Administrative controls form the framework for running the business and managing people. They inform people on how the business is to be run and how day to day operations are to be conducted. Laws and regulations created by government bodies are also a type of administrative control because they inform the business. Some industry sectors have policies, procedures, standards and guidelines that must be followed – the Payment Card Industry (PCI) Data Security Standard required by Visa and MasterCard is such an example. Other examples of administrative controls include the corporate security policy, password policy, hiring policies, and disciplinary policies.

Administrative controls form the basis for the selection and implementation of logical and physical controls. Logical and physical controls are manifestations of administrative controls. Administrative controls are of paramount importance.

Logical

Logical controls (also called technical controls) use software and data to monitor and control access to information and computing systems. For example: passwords, network and host based firewalls, network intrusion detection systems, access control lists, and data encryption are logical controls.

An important logical control that is frequently overlooked is the principle of least privilege. The principle of least privilege requires that an individual, program or system process is not granted any more access privileges than are necessary to perform the task. A blatant example of the failure to adhere to the principle of least privilege is logging into Windows as user Administrator to read Email and surf the Web. Violations of this principle can also occur when an individual collects additional access privileges over time. This happens when employees’ job duties change, or they are promoted to a new position, or they transfer to another department. The access privileges required by their new duties are frequently added onto their already existing access privileges which may no longer be necessary or appropriate.
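
The accumulation of privileges described above can be detected by comparing what an account has been granted with what its current duties require. The following is a minimal sketch; the permission names and the function `excess_privileges` are hypothetical.

```python
def excess_privileges(granted: set, required: set) -> set:
    """Return the privileges an account holds but does not need."""
    return granted - required


# Hypothetical account that kept old privileges after a change of duties.
granted = {"read_email", "browse_web", "install_software", "manage_users"}
required = {"read_email", "browse_web"}

print(sorted(excess_privileges(granted, required)))
```

Running such a comparison periodically, for example whenever an employee changes role, gives reviewers a concrete list of privileges to revoke.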

Physical

Physical controls monitor and control the environment of the work place and computing facilities. They also monitor and control access to and from such facilities. For example: doors, locks, heating and air conditioning, smoke and fire alarms, fire suppression systems, cameras, barricades, fencing, security guards, cable locks, etc. Separating the network and workplace into functional areas is also a physical control.

An important physical control that is frequently overlooked is the separation of duties. Separation of duties ensures that an individual cannot complete a critical task alone. For example: an employee who submits a request for reimbursement should not also be able to authorize payment or print the check. An applications programmer should not also be the server administrator or the database administrator; these roles and responsibilities must be separated from one another.
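
The reimbursement example can be enforced in software with a single check at approval time. This sketch assumes a request is represented as a dictionary with a `submitted_by` field; that layout and the function name `approve` are illustrative only.

```python
def approve(request: dict, approver: str) -> dict:
    """Approve a reimbursement request, refusing self-approval.

    Returns a new request record with the approver recorded.
    """
    if approver == request["submitted_by"]:
        raise PermissionError("the submitter cannot approve their own request")
    return {**request, "approved_by": approver}


request = {"id": 1, "submitted_by": "alice", "amount": 120.0}

approved = approve(request, "bob")   # a second person signs off
print(approved["approved_by"])

try:
    approve(request, "alice")        # same person: rejected
except PermissionError as err:
    print("rejected:", err)
```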

Defense in depth

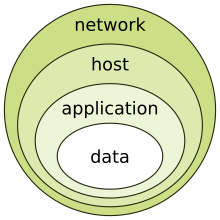

Information security must protect information throughout the life span of the information, from the initial creation of the information on through to the final disposal of the information. The information must be protected while in motion and while at rest. During its lifetime, information may pass through many different information processing systems and through many different parts of information processing systems. There are many different ways the information and information systems can be threatened. To fully protect the information during its lifetime, each component of the information processing system must have its own protection mechanisms. The building up, layering on and overlapping of security measures is called defense in depth. The strength of any system is no greater than its weakest link. Using a defense in depth strategy, should one defensive measure fail there are other defensive measures in place that continue to provide protection.

Recall the earlier discussion about administrative controls, logical controls, and physical controls. The three types of controls can be used to form the basis upon which to build a defense-in-depth strategy. With this approach, defense-in-depth can be conceptualized as three distinct layers or planes laid one on top of the other. Additional insight into defense-in-depth can be gained by thinking of it as forming the layers of an onion, with data at the core of the onion, people the next outer layer of the onion, and network security, host-based security and application security forming the outermost layers of the onion. Both perspectives are equally valid and each provides valuable insight into the implementation of a good defense-in-depth strategy.

Security classification for information

An important aspect of information security and risk management is recognizing the value of information and defining appropriate procedures and protection requirements for the information. Not all information is equal and so not all information requires the same degree of protection. This requires information to be assigned a security classification.

The first step in information classification is to identify a member of senior management as the owner of the particular information to be classified. Next, develop a classification policy. The policy should describe the different classification labels, define the criteria for information to be assigned a particular label, and list the required security controls for each classification.

Some factors that influence which classification information should be assigned include how much value that information has to the organization, how old the information is and whether or not the information has become obsolete. Laws and other regulatory requirements are also important considerations when classifying information.

The Business Model for Information Security enables security professionals to examine security from a systems perspective, creating an environment where security can be managed holistically, allowing actual risks to be addressed.

The type of information security classification labels selected and used will depend on the nature of the organization, with examples being:

- In the business sector, labels such as: Public, Sensitive, Private, Confidential.

- In the government sector, labels such as: Unclassified, Sensitive But Unclassified, Restricted, Confidential, Secret, Top Secret and their non-English equivalents.

- In cross-sectoral formations, the Traffic Light Protocol, which consists of: White, Green, Amber, and Red.

All employees in the organization, as well as business partners, must be trained on the classification schema and understand the required security controls and handling procedures for each classification. The classification of a particular information asset that has been assigned should be reviewed periodically to ensure the classification is still appropriate for the information and to ensure the security controls required by the classification are in place.
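
A classification policy of the kind described above can be written down as data: an ordered set of labels and the controls each label requires. The sketch below uses the business-sector labels listed earlier and assumes they are ordered from least to most sensitive; the control names are invented for illustration.

```python
from enum import IntEnum


class Label(IntEnum):
    """Business-sector labels, assumed to increase in sensitivity."""
    PUBLIC = 0
    SENSITIVE = 1
    PRIVATE = 2
    CONFIDENTIAL = 3


# Hypothetical policy: the controls required for each label.
REQUIRED_CONTROLS = {
    Label.PUBLIC: set(),
    Label.SENSITIVE: {"access_logging"},
    Label.PRIVATE: {"access_logging", "encryption_at_rest"},
    Label.CONFIDENTIAL: {"access_logging", "encryption_at_rest", "need_to_know_review"},
}


def missing_controls(label: Label, in_place: set) -> set:
    """Return the required controls that are not yet in place for an asset."""
    return REQUIRED_CONTROLS[label] - in_place


# Periodic review of one asset: which required controls are absent?
print(sorted(missing_controls(Label.CONFIDENTIAL, {"access_logging"})))
```

Keeping the policy in one table makes the periodic review mentioned above a mechanical comparison rather than a matter of memory.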

Access control

Access to protected information must be restricted to people who are authorized to access the information. The computer programs, and in many cases the computers that process the information, must also be authorized. This requires that mechanisms be in place to control the access to protected information. The sophistication of the access control mechanisms should be in parity with the value of the information being protected: the more sensitive or valuable the information, the stronger the control mechanisms need to be. The foundation on which access control mechanisms are built starts with identification and authentication.

Identification is an assertion of who someone is or what something is. If a person makes the statement “Hello, my name is John Doe” they are making a claim of who they are. However, their claim may or may not be true. Before John Doe can be granted access to protected information it will be necessary to verify that the person claiming to be John Doe really is John Doe.

Authentication is the act of verifying a claim of identity. When John Doe goes into a bank to make a withdrawal, he tells the bank teller he is John Doe—a claim of identity. The bank teller asks to see a photo ID, so he hands the teller his driver’s license. The bank teller checks the license to make sure it has John Doe printed on it and compares the photograph on the license against the person claiming to be John Doe. If the photo and name match the person, then the teller has authenticated that John Doe is who he claimed to be.

There are three different types of information that can be used for authentication:

- Something you know: things such as a PIN, a password, or your mother’s maiden name.

- Something you have: a driver’s license or a magnetic swipe card.

- Something you are: biometrics, including palm prints, fingerprints, voice prints and retina (eye) scans.

Strong authentication requires providing more than one type of authentication information (two-factor authentication). The username is the most common form of identification on computer systems today and the password is the most common form of authentication. Usernames and passwords have served their purpose but in our modern world they are no longer adequate. Usernames and passwords are slowly being replaced with more sophisticated authentication mechanisms.
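
The rule that strong authentication needs more than one type of information can be expressed as a check on factor categories. In this sketch the mapping `FACTOR_TYPES` and the function `is_strong` are hypothetical; it only classifies the evidence presented and does not verify any of it.

```python
# Hypothetical mapping from a credential to its category:
# something you know, something you have, or something you are.
FACTOR_TYPES = {
    "password": "know",
    "pin": "know",
    "swipe_card": "have",
    "drivers_license": "have",
    "fingerprint": "are",
    "retina_scan": "are",
}


def is_strong(presented: list) -> bool:
    """True if the presented credentials cover at least two different types."""
    types = {FACTOR_TYPES[factor] for factor in presented}
    return len(types) >= 2


print(is_strong(["password", "pin"]))          # two credentials, one type
print(is_strong(["password", "fingerprint"]))  # two different types
```

The first call returns False because a password and a PIN are both "something you know"; two credentials of the same type do not make two-factor authentication.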

After a person, program or computer has successfully been identified and authenticated then it must be determined what informational resources they are permitted to access and what actions they will be allowed to perform (run, view, create, delete, or change). This is called authorization. Authorization to access information and other computing services begins with administrative policies and procedures. The policies prescribe what information and computing services can be accessed, by whom, and under what conditions. The access control mechanisms are then configured to enforce these policies. Different computing systems are equipped with different kinds of access control mechanisms—some may even offer a choice of different access control mechanisms. The access control mechanism a system offers will be based upon one of three approaches to access control or it may be derived from a combination of the three approaches.

The non-discretionary approach consolidates all access control under a centralized administration. Access to information and other resources is usually based on the individual's function (role) in the organization or the tasks the individual must perform. The discretionary approach gives the creator or owner of the information resource the ability to control access to those resources. In the mandatory access control approach, access is granted or denied based upon the security classification assigned to the information resource.

Examples of common access control mechanisms in use today include role-based access control, available in many advanced database management systems; simple file permissions provided in the UNIX and Windows operating systems; Group Policy Objects provided in Windows network systems; Kerberos, RADIUS and TACACS; and the simple access lists used in many firewalls and routers.
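
The role-based variant of the non-discretionary approach can be sketched with two lookup tables, one from users to roles and one from roles to permitted actions. The users, roles and action names below are hypothetical and the tables would normally live in a directory or database under central administration.

```python
# Hypothetical, centrally administered tables.
ROLE_PERMISSIONS = {
    "teller": {"view_account"},
    "manager": {"view_account", "approve_loan"},
}
USER_ROLES = {
    "alice": {"teller"},
    "bob": {"manager"},
}


def is_authorized(user: str, action: str) -> bool:
    """True if any of the user's roles permits the action."""
    roles = USER_ROLES.get(user, set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_authorized("alice", "view_account"))
print(is_authorized("alice", "approve_loan"))
print(is_authorized("bob", "approve_loan"))
```

Because permissions hang on roles rather than on individuals, moving a person to a new position means changing one entry in `USER_ROLES` instead of editing many individual grants.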

To be effective, policies and other security controls must be enforceable and upheld. Effective policies ensure that people are held accountable for their actions. All failed and successful authentication attempts must be logged, and all access to information must leave some type of audit trail.
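
The logging requirement can be illustrated with an in-memory audit trail that records every authentication attempt, successful or not. This is a sketch only: the record layout and the names `record_attempt` and `failed_attempts` are assumptions, and a real system would write to tamper-resistant storage rather than a Python list.

```python
from datetime import datetime, timezone

audit_trail = []  # in-memory stand-in for a protected audit log


def record_attempt(username: str, success: bool) -> None:
    """Append one authentication attempt to the audit trail."""
    audit_trail.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": username,
        "event": "login",
        "success": success,
    })


def failed_attempts(username: str) -> int:
    """Count the failed login attempts recorded for a user."""
    return sum(
        1 for entry in audit_trail
        if entry["user"] == username and not entry["success"]
    )


record_attempt("alice", True)     # successful login is logged
record_attempt("mallory", False)  # failed login is logged as well

print(len(audit_trail), failed_attempts("mallory"))
```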

The need-to-know principle must also be in effect when discussing access control. It grants a person only the access rights required to perform their job functions. The principle is used in government when dealing with different clearances: even though two employees in different departments both hold a top-secret clearance, each must have a need to know before information can be exchanged. Under the need-to-know principle, network administrators grant each employee the least amount of privilege, to prevent employees from accessing or doing more than they are supposed to. Need-to-know helps to enforce the confidentiality-integrity-availability (C‑I‑A) triad, and directly affects the confidentiality area of the triad.
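
Clearance and need-to-know are two separate tests, and both must pass. The sketch below models clearance as an ordered level and need-to-know as membership in the project a document belongs to; the level names follow the government labels mentioned earlier, while the project names and the function `may_read` are hypothetical.

```python
# Ordered clearance levels (higher number = more sensitive).
CLEARANCE = {"unclassified": 0, "confidential": 1, "secret": 2, "top_secret": 3}


def may_read(user_clearance: str, user_projects: set,
             doc_level: str, doc_project: str) -> bool:
    """Allow access only with sufficient clearance AND a need to know."""
    cleared = CLEARANCE[user_clearance] >= CLEARANCE[doc_level]
    needs_to_know = doc_project in user_projects
    return cleared and needs_to_know


# Two top-secret holders in different departments (hypothetical projects).
print(may_read("top_secret", {"project_a"}, "top_secret", "project_a"))
print(may_read("top_secret", {"project_b"}, "top_secret", "project_a"))
```

The second call is refused even though the clearance is sufficient, which is exactly the situation of the two top-secret employees described above.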

Cryptography

Information security uses cryptography to transform usable information into a form that renders it unusable by anyone other than an authorized user; this process is called encryption. Information that has been encrypted (rendered unusable) can be transformed back into its original usable form by an authorized user, who possesses the cryptographic key, through the process of decryption. Cryptography is used in information security to protect information from unauthorized or accidental disclosure while the information is in transit (either electronically or physically) and while information is in storage.
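
The round trip of encryption and decryption with a key can be shown with the simplest textbook cipher, a one-time pad built on XOR. This is an illustrative sketch and not a recommendation: it is secure only if the key is truly random, as long as the message, kept secret and never reused, which is why practical systems rely on reviewed algorithms such as AES instead. The function name `xor_bytes` is an assumption.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the matching byte of the key.

    The same function encrypts and decrypts, because XOR is its own inverse.
    """
    if len(key) != len(data):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


plaintext = b"meet at noon"
key = secrets.token_bytes(len(plaintext))  # fresh random key, used once

ciphertext = xor_bytes(plaintext, key)     # encryption
recovered = xor_bytes(ciphertext, key)     # decryption by the key holder

print(recovered == plaintext)
```

Anyone without the key sees only random-looking bytes, while the authorized user who possesses the key recovers the original message.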

Cryptography provides information security with other useful applications as well, including improved authentication methods, message digests, digital signatures, non-repudiation, and encrypted network communications. Older, less secure applications such as telnet and ftp are slowly being replaced with more secure applications such as ssh that use encrypted network communications. Wireless communications can be encrypted using protocols such as WPA/WPA2 or the older (and less secure) WEP. Wired communications (such as ITU‑T G.hn) are secured using AES for encryption and X.1035 for authentication and key exchange. Software applications such as GnuPG or PGP can be used to encrypt data files and Email.

Cryptography can introduce security problems when it is not implemented correctly. Cryptographic solutions need to be implemented using industry accepted solutions that have undergone rigorous peer review by independent experts in cryptography. The length and strength of the encryption key is also an important consideration. A key that is weak or too short will produce weak encryption. The keys used for encryption and decryption must be protected with the same degree of rigor as any other confidential information. They must be protected from unauthorized disclosure and destruction and they must be available when needed. Public key infrastructure (PKI) solutions address many of the problems that surround key management.
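
The effect of key length can be made concrete by counting the keys an attacker would have to try in an exhaustive search: an n-bit key has 2^n possible values. The sketch below compares two key lengths and generates a random key with the standard `secrets` module; the function names and the 56-bit and 128-bit figures are chosen for illustration, and key length alone says nothing about the quality of the algorithm.

```python
import secrets


def keyspace_size(key_bits: int) -> int:
    """Number of distinct keys of the given length in bits."""
    return 2 ** key_bits


def generate_key(key_bits: int = 256) -> bytes:
    """Generate a random key from the operating system's secure source."""
    if key_bits % 8 != 0:
        raise ValueError("key length must be a whole number of bytes")
    return secrets.token_bytes(key_bits // 8)


# How many times larger is a 128-bit key space than a 56-bit one?
ratio = keyspace_size(128) // keyspace_size(56)
print(ratio == 2 ** 72)

key = generate_key()
print(len(key), "bytes")
```

Each additional bit doubles the search effort, so a modest increase in length produces an enormous increase in the work needed to guess the key.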

Process

The terms reasonable and prudent person, due care and due diligence have been used in the fields of Finance, Securities, and Law for many years. In recent years these terms have found their way into the fields of computing and information security. The U.S. Federal Sentencing Guidelines now make it possible to hold corporate officers liable for failing to exercise due care and due diligence in the management of their information systems.

In the business world, stockholders, customers, business partners and governments have the expectation that corporate officers will run the business in accordance with accepted business practices and in compliance with laws and other regulatory requirements. This is often described as the “reasonable and prudent person” rule. A prudent person takes due care to ensure that everything necessary is done to operate the business by sound business principles and in a legal, ethical manner. A prudent person is also diligent (mindful, attentive, and ongoing) in their due care of the business.

In the field of Information Security, Harris offers the following definitions of due care and due diligence:

“Due care are steps that are taken to show that a company has taken responsibility for the activities that take place within the corporation and has taken the necessary steps to help protect the company, its resources, and employees.” And, [Due diligence are the] “continual activities that make sure the protection mechanisms are continually maintained and operational.”

Attention should be paid to two important points in these definitions. First, in due care, steps are taken to show: this means that the steps can be verified, measured, or even produce tangible artifacts. Second, in due diligence, there are continual activities: this means that people are actually doing things to monitor and maintain the protection mechanisms, and these activities are ongoing.

Security governance

The Software Engineering Institute at Carnegie Mellon University, in a publication titled “Governing for Enterprise Security (GES)”, defines characteristics of effective security governance. These include:

- An enterprise-wide issue

- Leaders are accountable

- Viewed as a business requirement

- Risk-based

- Roles, responsibilities, and segregation of duties defined

- Addressed and enforced in policy

- Adequate resources committed

- Staff aware and trained

- A development life cycle requirement

- Planned, managed, measurable, and measured

- Reviewed and audited

Incident response plans

An incident response plan typically addresses the following topics:

- Selecting team members

- Define roles, responsibilities and lines of authority

- Define a security incident

- Define a reportable incident

- Training

- Detection

- Classification

- Escalation

- Containment

- Eradication

- Documentation

Change management

Any change to the information processing environment introduces an element of risk. Even apparently simple changes can have unexpected effects. One of Management’s many responsibilities is the management of risk. Change management is a tool for managing the risks introduced by changes to the information processing environment. Part of the change management process ensures that changes are not implemented at inopportune times when they may disrupt critical business processes or interfere with other changes being implemented.

Not every change needs to be managed. Some kinds of changes are part of the everyday routine of information processing and adhere to a predefined procedure, which reduces the overall level of risk to the processing environment. Creating a new user account or deploying a new desktop computer are examples of changes that do not generally require change management. However, relocating user file shares or upgrading the email server poses a much higher level of risk to the processing environment and is not a normal everyday activity. The critical first steps in change management are (a) defining change (and communicating that definition) and (b) defining the scope of the change system.

Change management is usually overseen by a Change Review Board composed of representatives from key business areas, security, networking, systems administration, database administration, applications development, desktop support and the help desk. The tasks of the Change Review Board can be facilitated with an automated workflow application. The responsibility of the Change Review Board is to ensure that the organization’s documented change management procedures are followed. The change management process is as follows:

- Requested: Anyone can request a change. The person making the change request may or may not be the same person who performs the analysis or implements the change. When a request for change is received, it may undergo a preliminary review to determine whether the requested change is compatible with the organization’s business model and practices, and to determine the amount of resources needed to implement the change.

- Approved: Management runs the business and controls the allocation of resources; therefore, management must approve requests for changes and assign a priority to every change. Management might choose to reject a change request if the change is not compatible with the business model, industry standards or best practices. Management might also choose to reject a change request if the change requires more resources than can be allocated for it.

- Planned: Planning a change involves discovering the scope and impact of the proposed change; analyzing the complexity of the change; allocating resources; and developing, testing and documenting both implementation and backout plans. The criteria on which a decision to back out will be made must also be defined.

- Tested: Every change must be tested in a safe test environment, which closely reflects the actual production environment, before the change is applied to the production environment. The backout plan must also be tested.

- Scheduled: Part of the change review board’s responsibility is to assist in the scheduling of changes by reviewing the proposed implementation date for potential conflicts with other scheduled changes or critical business activities.

- Communicated: Once a change has been scheduled it must be communicated. The communication is to give others the opportunity to remind the change review board about other changes or critical business activities that might have been overlooked when scheduling the change. The communication also serves to make the Help Desk and users aware that a change is about to occur. Another responsibility of the change review board is to ensure that scheduled changes have been properly communicated to those who will be affected by the change or otherwise have an interest in the change.

- Implemented: At the appointed date and time, the changes must be implemented. Part of the planning process was to develop an implementation plan, a testing plan and a backout plan. If the implementation of the change fails, the post-implementation testing fails, or other “drop dead” criteria have been met, the backout plan should be implemented.

- Documented: All changes must be documented. The documentation includes the initial request for change, its approval, the priority assigned to it, the implementation, testing and back out plans, the results of the change review board critique, the date/time the change was implemented, who implemented it, and whether the change was implemented successfully, failed or postponed.

- Post change review: The change review board should hold a post implementation review of changes. It is particularly important to review failed and backed out changes. The review board should try to understand the problems that were encountered, and look for areas for improvement.
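The steps listed above can be sketched as a simple linear workflow. The following is a minimal illustration, not a prescribed implementation: the names `Stage`, `ChangeRequest`, `advance` and `back_out` are hypothetical, and the sketch assumes a change moves through the stages strictly in the order given, leaving out rejection by management and postponement.

```python
from enum import Enum


class Stage(Enum):
    """Change management stages, in the order listed in the text."""
    REQUESTED = 1
    APPROVED = 2
    PLANNED = 3
    TESTED = 4
    SCHEDULED = 5
    COMMUNICATED = 6
    IMPLEMENTED = 7
    DOCUMENTED = 8
    POST_CHANGE_REVIEW = 9


class ChangeRequest:
    """Hypothetical record of one change moving through the process."""

    def __init__(self, summary):
        self.summary = summary
        self.stage = Stage.REQUESTED
        self.history = [Stage.REQUESTED]  # every stage the change has reached
        self.backed_out = False

    def advance(self):
        """Move to the next stage; stages cannot be skipped."""
        if self.stage is Stage.POST_CHANGE_REVIEW:
            raise ValueError("change has already completed its post change review")
        self.stage = Stage(self.stage.value + 1)
        self.history.append(self.stage)
        return self.stage

    def back_out(self):
        """Record that the backout plan was invoked during implementation."""
        if self.stage is not Stage.IMPLEMENTED:
            raise ValueError("the backout plan only applies during implementation")
        self.backed_out = True


# Example: a change that fails in production is backed out,
# but is still documented and reviewed afterwards.
change = ChangeRequest("Upgrade the email server")
while change.stage is not Stage.IMPLEMENTED:
    change.advance()
change.back_out()
change.advance()  # DOCUMENTED
change.advance()  # POST_CHANGE_REVIEW
```

Keeping the full stage history on the record mirrors the documentation requirement: the outcome of each step, including a backout, remains available for the post change review.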

Change management procedures that are simple to follow and easy to use can greatly reduce the overall risks created when changes are made to the information processing environment. Good change management procedures improve the overall quality and success of changes as they are implemented. This is accomplished through planning, peer review, documentation and communication.

ISO/IEC 20000, The Visible OPS Handbook: Implementing ITIL in 4 Practical and Auditable Steps, and the Information Technology Infrastructure Library (ITIL) all provide valuable guidance on implementing an efficient and effective change management program for information security.

Business continuity

Contrary to what most people think, business continuity is not necessarily an IT system or process, simply because it is about the business. Today, disasters and disruptions to business are a reality. Whether a disaster is natural or man-made, it affects normal life and therefore business. So why is planning so important? Let us face the reality that “all businesses recover”, whether they planned for recovery or not, simply because business is about earning money for survival. Business continuity is the mechanism by which an organization continues to operate its critical business units, during planned or unplanned disruptions that affect normal business operations, by invoking planned and managed procedures.

Planning is merely getting better prepared to face a disruption, knowing full well that the best plans may fail. Planning helps to reduce the cost of recovery and operational overheads and, most importantly, to sail through smaller disruptions effortlessly.

To create effective plans, businesses need to focus on the following key questions. Most of these are common knowledge, and anyone can prepare a business continuity plan (BCP).

- Should a disaster strike, what are the first few things that I should do? Should I call people to find out whether they are OK, or call the bank to check that my money is safe? This is Emergency Response. Emergency Response services help take the first hit when the disaster strikes, and if the disaster is serious enough the Emergency Response teams need to quickly get a Crisis Management team in place.

- What parts of my business should I recover first? The one that brings me most money or the one where I spend the most, or the one that will ensure I shall be able to get sustained future growth? The identified sections are the critical business units. There is no magic bullet here, no one answer satisfies all. Businesses need to find answers that meet business requirements.

- How soon should I aim to recover my critical business units? In BCP technical jargon this is called the Recovery Time Objective, or RTO. This objective determines how much the business will need to spend to recover from a disruption. For example, it is cheaper to recover a business in 1 day than in 1 hour.

- What do I need in order to recover the business? IT, machinery, records, food, water, people: there are many aspects to consider. The cost factor becomes clearer now, which is why business leaders need to drive business continuity. But my IT manager spent $200,000 last month creating a DRP (Disaster Recovery Plan); what happened to that? A DRP is about continuing an IT system, and is one section of a comprehensive Business Continuity Plan. See the section on disaster recovery planning below for more on this.

- And where do I recover my business from? Will the business center give me space to work, or will it be flooded by many people queuing up for the same reasons that I am?

- Once I do recover from the disaster and work at reduced production capacity because my main operational sites are unavailable, how long can this go on? How long can I do without my original sites, systems and people? This defines how much resilience the business has.

- Now that I know how to recover my business, how do I make sure my plan works? Most BCP pundits would recommend testing the plan at least once a year, reviewing it for adequacy, and rewriting or updating it either annually or when the business changes.
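The role of the Recovery Time Objective in these questions can be illustrated with a short sketch. It assumes, purely for illustration, that units with the tightest RTO are recovered first; as noted above, no single prioritization rule suits every business. The class `BusinessUnit`, the functions `recovery_order` and `missed_rto`, and all unit names and hour values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BusinessUnit:
    name: str
    rto_hours: float  # Recovery Time Objective: target time to restore the unit


def recovery_order(units):
    """Return the units sorted so that the tightest RTO comes first (an assumed policy)."""
    return sorted(units, key=lambda unit: unit.rto_hours)


def missed_rto(units, actual_hours):
    """Return the names of units whose measured recovery time exceeded their RTO."""
    return [unit.name for unit in units if actual_hours[unit.name] > unit.rto_hours]


# Hypothetical critical business units and RTO values.
units = [
    BusinessUnit("payroll", 72),
    BusinessUnit("order processing", 4),
    BusinessUnit("customer support", 24),
]

# Recovery times measured during a hypothetical annual test of the plan.
test_results = {"payroll": 48, "order processing": 6, "customer support": 24}

plan = [unit.name for unit in recovery_order(units)]
gaps = missed_rto(units, test_results)
```

Comparing measured recovery times against each RTO is one way to make the periodic plan test concrete: any unit returned by `missed_rto` indicates that the plan, or the objective itself, needs revisiting.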

Disaster recovery planning

While a business continuity plan (BCP) takes a broad approach to dealing with the organization-wide effects of a disaster, a disaster recovery plan (DRP), which is a subset of the business continuity plan, is instead focused on taking the necessary steps to resume normal business operations as quickly as possible. A disaster recovery plan is executed immediately after the disaster occurs and details what steps are to be taken in order to recover critical information technology infrastructure. Disaster recovery planning includes establishing a planning group, performing a risk assessment, establishing priorities, developing recovery strategies, preparing inventories and documentation of the plan, developing verification criteria and procedures, and lastly implementing the plan.

Laws and regulations

Figure: Privacy International 2007 privacy ranking (green: protections and safeguards; red: endemic surveillance societies).

Below is a partial listing of European, United Kingdom, Canadian and USA governmental laws and regulations that have, or will have, a significant effect on data processing and information security. Important industry sector regulations have also been included when they have a significant impact on information security.

- The UK Data Protection Act 1998 makes new provisions for the regulation of the processing of information relating to individuals, including the obtaining, holding, use or disclosure of such information. The European Union Data Protection Directive (EUDPD) requires that all EU member states adopt national regulations to standardize the protection of data privacy for citizens throughout the EU.

- The Computer Misuse Act 1990 is an Act of the UK Parliament making computer crime (e.g. hacking) a criminal offence. The Act has become a model upon which several other countries including Canada and the Republic of Ireland have drawn inspiration when subsequently drafting their own information security laws.

- EU Data Retention laws require Internet service providers and phone companies to keep data on every electronic message sent and phone call made for between six months and two years.

- The Family Educational Rights and Privacy Act (FERPA) (20 U.S.C. § 1232g; 34 CFR Part 99) is a US federal law that protects the privacy of student education records. The law applies to all schools that receive funds under an applicable program of the U.S. Department of Education. Generally, schools must have written permission from the parent or eligible student in order to release any information from a student’s education record.

- The Federal Financial Institutions Examination Council’s (FFIEC) security guidelines for auditors specify requirements for online banking security.

- The Health Insurance Portability and Accountability Act (HIPAA) of 1996 requires the adoption of national standards for electronic health care transactions and national identifiers for providers, health insurance plans, and employers. It also requires health care providers, insurance providers and employers to safeguard the security and privacy of health data.

- Gramm-Leach-Bliley Act of 1999 (GLBA), also known as the Financial Services Modernization Act of 1999, protects the privacy and security of private financial information that financial institutions collect, hold, and process.

- Sarbanes–Oxley Act of 2002 (SOX). Section 404 of the act requires publicly traded companies to assess the effectiveness of their internal controls for financial reporting in annual reports they submit at the end of each fiscal year. Chief information officers are responsible for the security, accuracy and the reliability of the systems that manage and report the financial data. The act also requires publicly traded companies to engage independent auditors who must attest to, and report on, the validity of their assessments.

- Payment Card Industry Data Security Standard (PCI DSS) establishes comprehensive requirements for enhancing payment account data security. It was developed by the founding payment brands of the PCI Security Standards Council, including American Express, Discover Financial Services, JCB, MasterCard Worldwide and Visa International, to help facilitate the broad adoption of consistent data security measures on a global basis. The PCI DSS is a multifaceted security standard that includes requirements for security management, policies, procedures, network architecture, software design and other critical protective measures.

- State Security Breach Notification Laws (California and many others) require businesses, nonprofits, and state institutions to notify consumers when unencrypted “personal information” may have been compromised, lost, or stolen.

- Personal Information Protection and Electronic Documents Act (PIPEDA): a Canadian Act to support and promote electronic commerce by protecting personal information that is collected, used or disclosed in certain circumstances, by providing for the use of electronic means to communicate or record information or transactions and by amending the Canada Evidence Act, the Statutory Instruments Act and the Statute Revision Act.

Sources of standards